Summary: For the first time, a robot has been trained to perform surgical procedures by watching videos of skilled surgeons, moving the field of robotic surgery a step closer to autonomy. With the help of “imitation learning,” robots can learn complex tasks without being programmed for every individual movement.

Trained on clinical footage, the robot performed tasks as skillfully as human surgeons and showed that it could adapt and correct its own actions. According to the researchers, this method could speed up the training of surgical robots and open the way to fully autonomous surgeries in the future.

The technology applies the same fundamental AI principles that underlie large language models, adapted to handle robotic motion. The approach could improve precision and reduce medical errors, transforming the surgical field.

Key Facts:

- Robots trained through video imitation performed surgical tasks as effectively as human surgeons.

- This AI approach accelerates the training of surgical robots, enabling faster and more adaptable learning.

- The model uses machine learning similar to that behind ChatGPT, but operates in the “language” of robot kinematics.

Source: Johns Hopkins Medicine

A robot, trained for the first time by watching videos of skilled surgeons, executed the same surgical procedures as skillfully as the human doctors.

The ability to train surgical robots using imitation learning eliminates the need to program them for each individual move made during a surgical procedure, bringing robotic surgery closer to true autonomy, where robots can carry out complex operations without human help.

Senior author Axel Krieger said, “It’s really wonderful to have this model, and all we do is feed it camera input, and it can predict the robotic movements needed for surgery. We think this represents a major advance in medical robotics.”

The research, conducted by Johns Hopkins University researchers, is being presented this week at the Conference on Robot Learning in Munich, a top meeting for robotics and machine learning.

The team, which included Stanford University researchers, used imitation learning to train the da Vinci Surgical System robot to perform three fundamental surgical tasks: manipulating a needle, lifting body tissue, and suturing.

The model combined imitation learning with the same machine learning architecture that underpins ChatGPT. But where ChatGPT works with words and text, this model speaks “robot” through kinematics, a language that breaks down the angles of robotic motion into mathematical components.

The researchers fed their model hundreds of videos recorded during surgical procedures by wrist cameras mounted on the arms of da Vinci robots. These videos, recorded by surgeons all over the world, are used for post-operative analysis and then archived.

Nearly 7,000 da Vinci robots are in use worldwide, and more than 50,000 surgeons are trained on the system, creating a large archive of data for robots to “imitate.”

While the da Vinci system is widely used, researchers say it is notoriously imprecise. However, the team found a way to make the flawed input work. The key was to train the model to perform relative movements rather than absolute actions, which are inaccurate.
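To make the absolute-versus-relative distinction concrete, here is a minimal Python sketch. It assumes one simple reading of “relative movements”: each action is the change from one pose to the next, and a pose is just a list of numbers. The names `to_relative` and `replay`, the three-step trajectory, and the constant calibration offset are hypothetical illustrations, not the team’s actual action representation. The sketch shows why relative actions can tolerate a consistently biased robot: the offset shifts every absolute pose but cancels out when consecutive poses are subtracted.

```python
from typing import List, Sequence

# Hypothetical pose format: one number per kinematic dimension (e.g. x, y, z).
Pose = List[float]


def to_relative(poses: Sequence[Pose]) -> List[Pose]:
    """Turn absolute poses into per-step deltas: pose[t + 1] - pose[t]."""
    return [[b - a for a, b in zip(p0, p1)] for p0, p1 in zip(poses, poses[1:])]


def replay(start: Pose, deltas: Sequence[Pose]) -> List[Pose]:
    """Apply deltas one after another, starting from the robot's current pose."""
    out = [list(start)]
    for d in deltas:
        out.append([x + dx for x, dx in zip(out[-1], d)])
    return out


# A recorded demonstration (true poses) and the same motion as the robot
# reports it, assuming a constant calibration offset (illustrative values).
true_poses = [[0.0, 0.0, 0.0], [1.0, 0.5, 0.0], [2.0, 1.0, 0.5]]
offset = [0.3, -0.2, 0.1]
reported = [[x + o for x, o in zip(p, offset)] for p in true_poses]

# Absolute targets inherit the offset; relative deltas do not.
abs_error = [r - t for r, t in zip(reported[-1], true_poses[-1])]
rel_true = to_relative(true_poses)
rel_reported = to_relative(reported)

# Replaying deltas learned from the biased readings, starting from where the
# robot actually is, recovers the true trajectory (up to float rounding).
rebuilt = replay(true_poses[0], rel_reported)

print("offset carried by absolute targets:", abs_error)
print("deltas from reported poses:", rel_reported)
print("trajectory rebuilt from deltas:", rebuilt)
```

Commanding the reported absolute poses would carry the offset into every target, while the deltas match the true motion. Real kinematic errors are generally not a single constant offset, so this toy example only illustrates the intuition, not how much error relative actions remove in practice.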

Lead author Ji Woong “Brian” Kim said, “All we need is image input, and then this AI system finds the right action.”

With only a few hundred demonstrations, the model can learn the procedure and generalize to environments it has not encountered before.

The team trained the robot to perform the three tasks: manipulating a needle, lifting body tissue, and suturing. In each case, the robot trained on the team’s model performed the same surgical procedures as well as skilled human surgeons.

“Here the model is so good at learning things we haven’t taught it,” Krieger said. “Like if it drops the needle, it will automatically pick it up and continue. This isn’t something I taught it to do.”

According to the researchers, the model could be used to quickly train a robot to perform any type of surgical procedure. The team is now using imitation learning to train a robot to perform not just small surgical tasks but full operations.

Before this advance, programming a robot to perform even the simplest part of an operation required hand-coding every step. Someone might spend a decade trying to model suturing, Krieger said, and that is suturing for just one type of surgery.

“It’s very limiting,” Krieger said. “What’s new here is that we can train a robot to learn different procedures in a few days. It enables us to achieve more accurate surgery while accelerating our pursuit of autonomy.”

Johns Hopkins authors include Samuel Schmidgall, Associate Research Engineer Anton Deguet, and Associate Professor of Mechanical Engineering Marin Kobilarov. Stanford University authors include PhD student Tony Z. Zhao.

About this research in robotics and AI

Author: Jill Rosen

Source: JHU

Contact: Jill Rosen – JHU

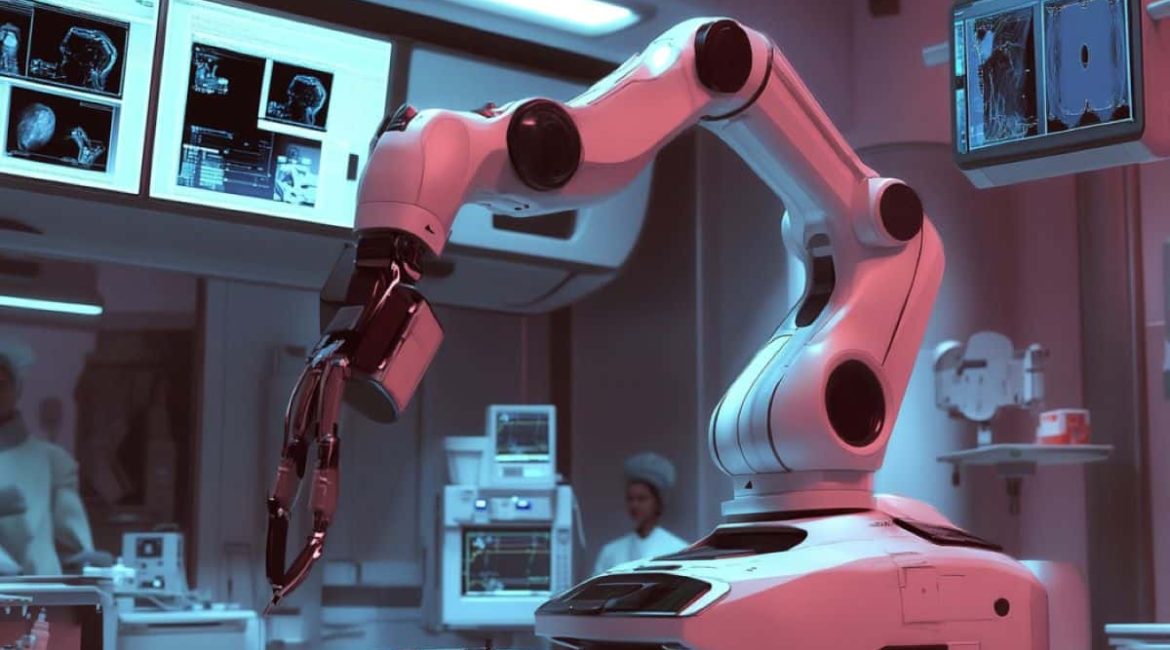

Image: The image is credited to Neuroscience News

Original Research: The findings will be presented at the Conference on Robot Learning