Summary: A surgical robot trained on videos of real procedures autonomously performed a critical phase of gallbladder removal, adapting to unexpected situations and responding to voice commands. The result shows how artificial intelligence can combine precision with the flexibility required for real-world medicine.

Using a machine-learning architecture similar to the one behind ChatGPT, the robot demonstrated expert-level performance even in challenging, variable conditions. Researchers say this marks a significant step toward fully autonomous, reliable surgical systems that can assist or operate independently.

Key Facts:

- Autonomous Adaptability: The robot adjusted to anatomical variations, unexpected events, and verbal corrections in real time.

- Imitation Learning: It was trained by watching videos of human surgeons, reinforced with captions describing the tasks.

- Expert-Level Results: It successfully completed a complex, 17-task phase of gallbladder removal with results comparable to those of expert surgeons.

Source: JHU

A robot trained on videos of surgeries performed a lengthy phase of a gallbladder removal without human help. The robot operated for the first time on a lifelike patient and, during the operation, responded to and learned from voice commands from the team, much as a novice surgeon works with a mentor.

The robot performed flawlessly across trials and with the expertise of a skilled human surgeon, even during unexpected scenarios typical of real-life medical emergencies.

The federally funded work, led by researchers at Johns Hopkins University, is a transformative advance in surgical robotics, in which robots can perform with both mechanical precision and human-like adaptability and understanding.

“This advancement moves us from robots that can execute specific surgical tasks to robots that truly understand surgical procedures,” said medical roboticist Axel Krieger.

“This is a critical distinction that brings us significantly closer to clinically viable autonomous surgical systems that can work in the messy, unpredictable reality of actual patient care.”

The findings have now been published in Science Robotics.

In 2022, Krieger’s Smart Tissue Autonomous Robot, STAR, performed the first autonomous robotic surgery on a live animal, a laparoscopic procedure on a pig. However, that robot required specially marked tissue, operated in a highly controlled environment, and followed a rigid, predetermined surgical plan. Krieger said it was like teaching a robot to drive along a carefully mapped route.

But his new system, he says, “is like teaching a robot to navigate any road, in any condition, responding intelligently to whatever it encounters.”

The Surgical Robot Transformer-Hierarchy, SRT-H, truly performs surgery, adapting to individual anatomical features in real time, making decisions on the fly, and self-correcting when things don’t go as planned.

Built with the same machine-learning architecture that powers ChatGPT, SRT-H is also interactive, able to respond to spoken commands (such as “grab the gallbladder head”) and corrections (such as “move the left arm a little to the left”). The robot learns from this feedback.

“This work represents a major leap from prior efforts because it tackles some of the fundamental barriers to deploying autonomous surgical robots in the real world,” said lead author Ji Woong “Brian” Kim, a former postdoctoral researcher at Johns Hopkins who is now at Stanford University.

“Our work shows that AI models can be made reliable enough for surgical autonomy, something that once felt far off but is now demonstrably viable.”

Last year, Krieger’s team used the system to train a robot to perform three foundational surgical tasks: manipulating a needle, lifting body tissue, and suturing. Those tasks took just a few seconds each.

The gallbladder removal procedure is much more complex, a minutes-long sequence of 17 tasks. The robot had to identify certain ducts and arteries, grab them precisely, place clips strategically, and sever parts with scissors.

SRT-H learned how to do the gallbladder work by watching videos of Johns Hopkins surgeons performing it on animal cadavers. The team reinforced the visual training with captions describing the tasks. After watching the videos, the robot performed the surgery with 100% accuracy.

Although the robot took longer to perform the work than a human surgeon, the results were comparable to those of an expert surgeon.

Just as surgical residents often master different parts of an operation at different rates, this work illustrates the promise of developing autonomous robotic systems in a similarly modular and progressive manner, according to Johns Hopkins surgeon Jeff Jopling, a co-author.

The robot performed flawlessly across anatomical conditions that were not uniform and during unexpected detours, such as when the researchers changed the robot’s starting position and when they added blood-like dyes that altered the appearance of the gallbladder and surrounding tissues.

“To me it really shows that it’s possible to perform complex surgical procedures autonomously,” Krieger said.

“This is a proof of concept that an imitation learning framework can automate such a complex procedure with a high degree of robustness.”

Next, the team wants to train and test the system on more types of surgeries and expand its capabilities to perform a complete autonomous surgery.

Authors include Stanford University PhD student Lucy X. Shi, Johns Hopkins undergraduate Antony Goldenberg, Johns Hopkins postdoctoral fellow Paul Maria Scheikl, Johns Hopkins research engineer Anton Deguet, Stanford University assistant professor Chelsea Finn, and De Ru Tsai and Richard Cha of Optosurgical.

About this robotics and AI research news

Author: Jill Rosen

Source: JHU

Contact: Jill Rosen – JHU

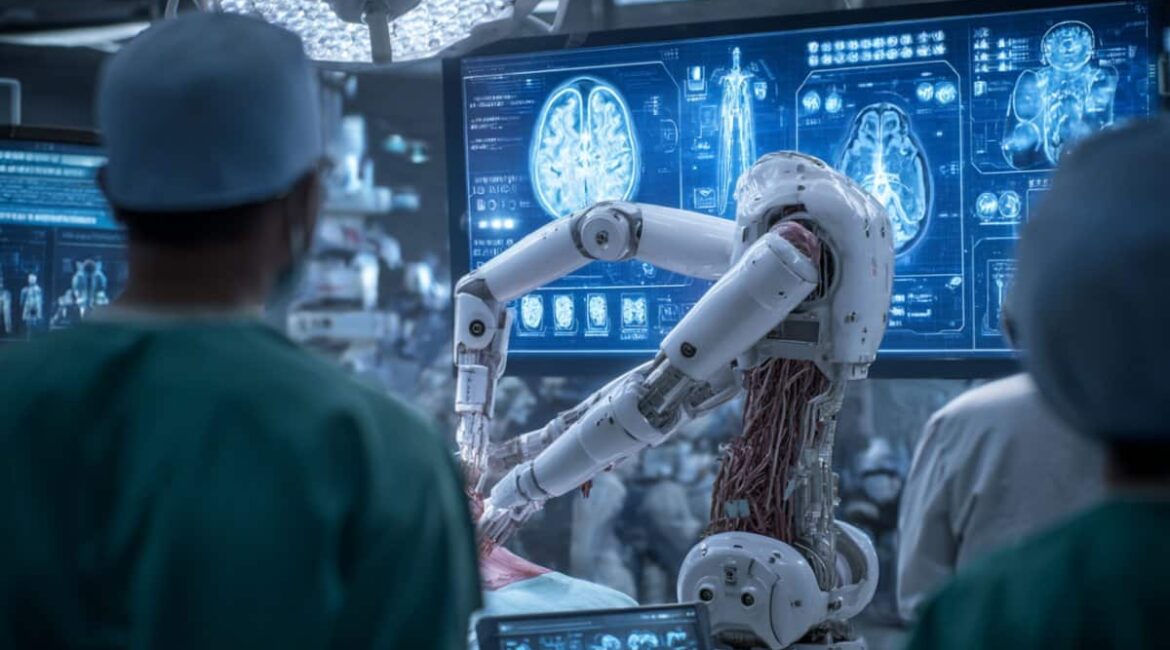

Image: The image is credited to Neuroscience News

Original Research: Closed access.

“SRT-H: A hierarchical framework for autonomous surgery via language-conditioned imitation learning” by Axel Krieger et al. Science Robotics

Abstract

SRT-H: A hierarchical framework for autonomous surgery via language-conditioned imitation learning

Research on autonomous surgery has largely focused on simple task automation in controlled environments. However, real-world surgical applications demand dexterous manipulation and robust generalization to the inherent variability of human tissue.

These challenges remain difficult to address using existing logic-based or conventional end-to-end learning strategies.

To bridge this gap, we propose a hierarchical framework for performing dexterous, long-horizon surgical steps. Our approach uses a high-level policy for task planning and a low-level policy for generating low-level trajectories.

The high-level planner plans in language space, generating task-level or corrective instructions that guide the robot through the long-horizon steps and help it recover from errors made by the low-level policy.

We validated our framework through ex vivo experiments on cholecystectomy, a commonly performed minimally invasive procedure, and conducted ablation studies to evaluate key components of the system.

Our approach achieved a 100% success rate across eight different ex vivo gallbladders, operating fully autonomously without human intervention. The hierarchical approach improved the policy’s ability to recover from suboptimal states that are inevitable in the highly dynamic environment of realistic surgical applications.

This work represents a significant step in the development of autonomous surgical systems for clinical use. It also demonstrates step-level autonomy in a surgical procedure.
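
The two-level structure described in the abstract can be illustrated with a toy sketch. This is not the authors' implementation: the task names, the one-dimensional `gripper_offset` state, the tolerance, and every function below are hypothetical stand-ins chosen only to show how a high-level policy might issue task-level or corrective instructions in language while a low-level policy turns each instruction into a short trajectory.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical task list; the article does not enumerate the 17 real tasks.
TASKS = ["grab the gallbladder head", "place clip on duct", "cut duct"]


@dataclass
class Observation:
    task_index: int        # which task the robot is currently attempting
    gripper_offset: float  # toy 1-D error: positive means tool is right of target


def high_level_policy(obs: Observation, tolerance: float = 0.5) -> str:
    """Plan in language space: return a corrective or task-level instruction."""
    if abs(obs.gripper_offset) > tolerance:
        direction = "left" if obs.gripper_offset > 0 else "right"
        return f"move the arm a little to the {direction}"
    return TASKS[obs.task_index]


def low_level_policy(obs: Observation, instruction: str, step: float = 1.0) -> List[float]:
    """Turn an instruction into a short trajectory of position deltas."""
    if instruction.startswith("move the arm"):
        delta = -step if instruction.endswith("left") else step
        # Clamp so a correction never overshoots the target.
        limit = abs(obs.gripper_offset)
        return [max(-limit, min(limit, delta))]
    return [0.0]  # in this toy model, executing a task needs no extra motion


def run_episode(disturbances: List[float], max_steps: int = 50) -> List[str]:
    """Run all tasks; disturbances[i] is the offset the robot starts task i with."""
    log: List[str] = []
    obs = Observation(task_index=0, gripper_offset=disturbances[0])
    for _ in range(max_steps):
        instruction = high_level_policy(obs)
        log.append(instruction)
        for delta in low_level_policy(obs, instruction):
            obs.gripper_offset += delta
        if instruction in TASKS:  # a task-level instruction completes the task
            if obs.task_index == len(TASKS) - 1:
                break
            obs.task_index += 1
            obs.gripper_offset = disturbances[obs.task_index]
    return log


if __name__ == "__main__":
    for line in run_episode([0.0, 1.5, -2.0]):
        print(line)
```

In this sketch the corrective instructions play the role of the recovery behavior the abstract attributes to the high-level planner: whenever the low-level state drifts outside tolerance, the planner interrupts the task sequence with a correction before moving on.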